Dr. Stephen Wittek, Postdoctoral Fellow, McGill

i. DREaM: Distant Reading Early Modernity

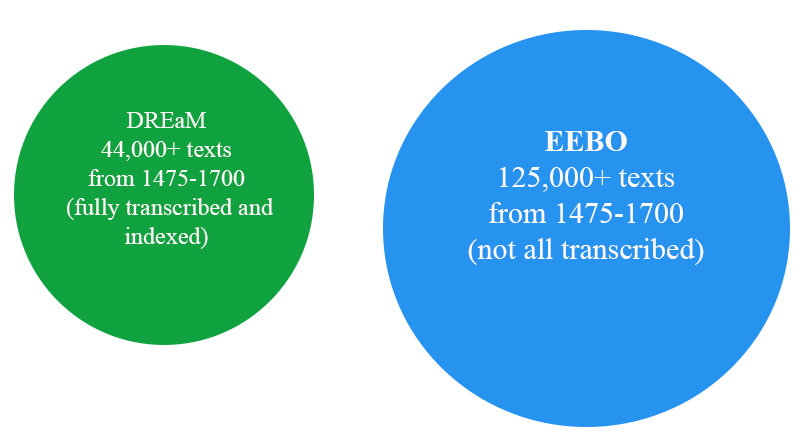

The DREaM Database is a collaborative digital humanities project that Stéfan Sinclair, Matt Milner, and I have been developing over the past year. When it is complete, it will make a massive corpus of some 44,000+ early modern English texts readily available for macro-scale textual analysis and visualization.

‘DREaM’ is an acronym for Distant Reading Early Modernity. As the title suggests, our approach derives from methodologies pioneered by Franco Moretti and his fellow researchers at the Stanford Literary Lab. Moretti coined the term ‘distant reading’ to describe computer-based techniques for analyzing large corpora. In Graphs, Maps, Trees, he argues that such techniques enable a bird’s-eye view of a corpus that conventional scholarly reading could never hope to achieve:

“A canon of two hundred novels, for instance, sounds very large for nineteenth-century Britain (and is much larger than the current one), but is still less than one per cent of the novels that were actually published: twenty thousand, thirty, more, no one really knows—and close reading won’t help here, a novel a day every day of the year would take a century or so.”

Notably, Moretti does not posit distant reading as a replacement for conventional scholarship, but as a complement. Matt Jockers makes this point explicit in a recent book entitled Macroanalysis: Methods in Digital Literary History.

“Macroanalysis [i.e., distant reading] is not a competitor pitted against close reading. Both the theory and the methodology are aimed at the discovery and delivery of evidence. This evidence is different from what is derived through close reading, but it is evidence, important evidence. At times, the new evidence will confirm what we have already gathered through anecdotal study. At other times, the evidence will alter our sense of what we thought we knew. Either way the result is a more accurate picture of our subject. This is not the stuff of radical campaigns or individual efforts to ‘conquer’ and lay waste to traditional modes of scholarship.”

Unlike EEBO, DREaM will enable mass downloading of custom-defined subsets rather than obliging users to download individual texts one-by-one. In other words, we have designed it to function at the level of ‘sets of texts,’ rather than ‘individual texts.’ Examples of subsets one might potentially generate include ‘all texts by Ben Jonson,’ ‘all texts published in 1623,’ or ‘all texts printed by John Wolfe.’ The subsets will be available as either plain text or XML encoded files, and users will have the option to automatically name individual files by date, author, title, or combinations thereof. They will also have the option to download subsets in the original spelling, or in an automatically normalized version.

The ability to generate custom-defined subsets is important because it will allow researchers to interrogate the early modern canon with distant reading tools such as David Newman’s Topic Modeling Tool or the suite of visualization tools available on Voyant-tools.org. By paving the way for these possibilities, DREaM will significantly expand the range and sophistication of technologies currently available to researchers who wish to gain a broad sense of printed matter in the period.

ii. Building the DREaM Database

Plans for the DREaM Database began when the directors of the Text Creation Project made the entire TCP corpus available to us following a request for access to raw materials. Although we were quite happy to have full command over such a vast collection of resources, it was immediately clear that we would have to do a great deal of organizing in order to prepare the corpus for the style of research we had hoped to carry out. The first, most obvious challenge concerned ‘metadata,’ which is a catchall term for all of the extra information that helps to keep documents organized: the author’s name, the date of publication, ISBN codes, etc. Following a protocol specifically designed for integration with EEBO, the TCP corpus stores metadata files and the files for transcribed texts in two separate sets of folders.

To understand the difficulty presented by this arrangement, imagine the TCP corpus as a pair of neighboring libraries. The first is a ‘Text Library’ and the second is a ‘Metadata Library.’ Upon entering the Text Library, one discovers a pile of 44,000 books stacked to the ceiling in a gigantic heap. None of the books have covers or title pages. The only item of identifying information is a code stamped on the spine that has no ostensible relevance or meaning—something along the lines of ‘A01046’ or ‘B02047.’ In order to discover the author or publication information for any given text, one must make note of the code and go to the neighboring Metadata Library, which contains a pile of 44,000 covers and title pages, each bearing a code that corresponds to a document in the Text Library next door.

Our first task, then, was to match all of the metadata files to the text files, thereby creating a new set of concatenated files that we could arrange within a searchable structure—a nice, well-organized library with shelves, catalogues, and helpful librarians to assist with queries.

Our next major challenge derived from complexities introduced by spelling variance.

Standardized spelling had yet to emerge in early modernity—writers had the freedom to spell however they pleased. To take a famous example, the name ‘Shakespeare’ has eighty different recorded spellings, including ‘Shaxpere’ and ‘Shaxberd.’ As one might imagine, variance on this scale presents a serious challenge for large-scale textual analysis. How is it possible to track the incidence of a specific word, or group of words, if any given word could have an unknown multiplicity of iterations?

To address this problem, Dr. Alistair Baron at Lancaster University in the UK has developed VARD 2, a tool that helps to improve the accuracy of textual analysis by finding candidate modern form replacements for spelling variants in historical texts. As with conventional spell-checkers, a user can choose to process texts manually (selecting a candidate replacement offered by the system), automatically (allowing the system to use the best candidate replacement found), or semi-automatically (training the tool on a sample of the corpora).

After some preliminary training, we ran the TCP corpus through VARD 2 using the default settings (auto normalization at a threshold of 50%). Rather than using the ‘batch’ mode—which proved unreliable for such a big job—we wrote a script that normalized the texts on a one-by-one basis. This process took about three days. VARD 2 normalized 80,676 terms for a grand total of 44,909,676 changes overall. Click here to download the full list.

A careful check through the list found that 373 of the term normalizations were problematic in one way or another. The problematic normalizations amounted to 462,975 changes overall—only 1.03% of the total number of changes. These results were satisfactory: our goal was not to make the corpus ‘perfectly normalized’ (an impossibility), but, more pragmatically, to make it ‘more-or-less normalized,’ which is the best one can reasonably expect from an automatic process. On this point, it is important to note that VARD encodes a record of all changes within the output XML file, so scholars will be able to see if the program has made an erroneous normalization.

Some of the problematic VARD normalizations seem to have derived from a dictionary error. For example, ‘chan’ became ‘champion’ and ‘ged’ became ‘general.’ In other instances, the problematic normalizations were ambiguous or borderline cases that we preferred to simply leave unchanged. Examples include ‘piece’ for ‘peece,’ and ‘land’ for ‘iland.’ There were also cases where the replacement term was not quite correct: ‘strawberie’ became ‘strawy’ rather than ‘strawberry,’ and ‘hoouering’ became ‘hoovering’ rather than ‘hovering.’ We fixed all of these little kinks by making adjustments to the VARD training file and running the entire corpus through the normalization process a second time. (Click here to download a spreadsheet showing the problematic normalizations and our proposed fixes.)

Of course, it is not difficult to imagine scenarios wherein a researcher may prefer to work with original spellings rather than normalized texts. With such projects in mind, we have planned to include normalized and non-normalized versions of the TCP corpus in DREaM.

Click here to see the demo video for the DREaM project.

Click here to see the DREaM internship reports from Winter 2015.